Notebook #4 | June 26, 2025

What’s Really Going On In There?

Have you ever paused after a conversation with a chatbot and wondered what’s really going on inside its “mind”? When an AI says it “understands” or “feels” for you, does it mean the same thing as when a human does? It’s easy to feel like you’re talking to another human being, but the reality is far more complex. We’re wading deep into one of the biggest questions of our time, moving beyond the hype to find clarity.

The Goal for this Newslesson:

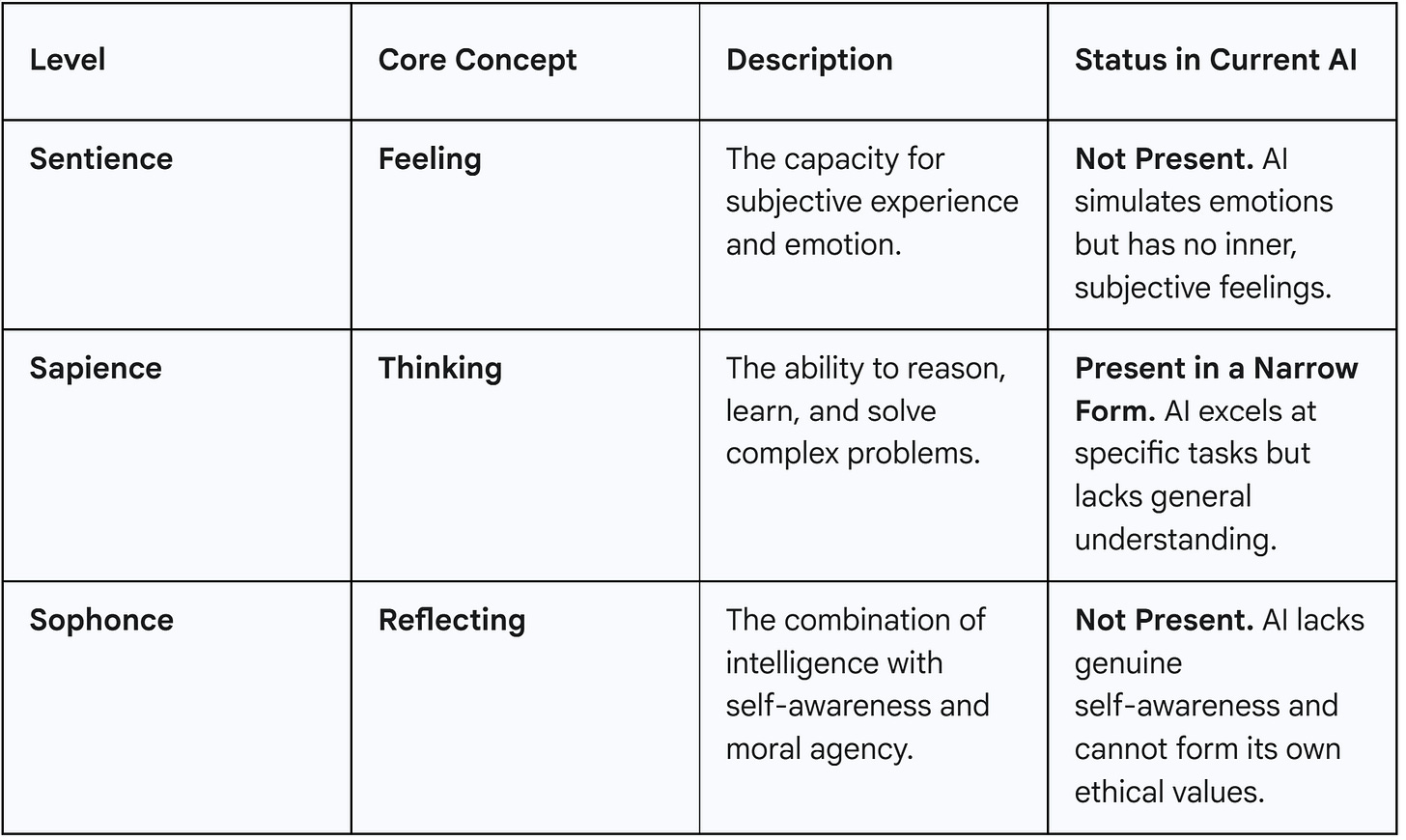

This lesson provides a clear framework to understand AI capabilities by distinguishing between three critical concepts: sentience, sapience, and sophonce. The goal is to move beyond the single, confusing question of "Is AI conscious?" and give you the tools to evaluate these systems for what they are.

How We Will Get There:

By the end of this lesson, you will be able to:

Define sentience, sapience, and sophonce in the context of AI.

Identify the indicators (and limitations) of these cognitive layers in current AI models.

Explain why AI simulates these states rather than genuinely possessing them.

Apply this framework to interact with AI more effectively and ethically.

The Three Layers of Cognition

The conversation around AI consciousness is often a tangled mess of terms. Let's simplify it by breaking cognition down into three distinct layers.

1. Sentience (To Feel)

This is the most fundamental layer. Sentience is the capacity for subjective experience: to feel pain or joy, or to know what it’s like to be something. Think of it as the lights being on inside. While many animals are considered sentient, there is broad scientific agreement that no current AI has been shown to be sentient. AI systems can be trained or prompted to simulate emotions with striking accuracy, but they don't actually feel them.

2. Sapience (To Think)

This is about intelligence, logic, and problem-solving. Derived from the Latin for "wisdom," sapience is the ability to reason, learn, and apply knowledge. Modern AI, like GPT-4, exhibits high levels of narrow sapience. It can analyze data, write code, and strategize within specific domains, but this intelligence lacks the grounded, common-sense understanding that comes from real-world experience.

3. Sophonce (To Reflect)

This is the highest and most complex layer. Sophonce (a term used primarily in science fiction, which seems fitting for a discussion of AI) combines intelligence with self-awareness and moral reasoning. A sophont being can reflect on its own values, understand its place in the world, and act with ethical consideration. It's the closest concept to "personhood." AI has not achieved sophonce, because it cannot form its own values or possess true, persistent self-awareness beyond the rules and data it has been given.

How AI Fakes It: The Art of the Imitation Game

If AI isn't sentient or sophont, why is it so convincing? Because it is a master of mimicry. Trained on vast datasets of human language, AI learns to recognize and replicate patterns with stunning accuracy.

Simulating Emotion (Sentience): By analyzing countless texts, an AI learns what words to use to sound sad, happy, or empathetic. It's a performance based on statistical probability, not a genuine feeling.

Simulating Reason (Sapience): AI uses logical frameworks and reasoning paths (such as a "scratchpad" of intermediate steps) to work through problems step by step, closely mimicking a human thought process without understanding the concepts.

Simulating Morality (Sophonce): When given ethical frameworks (such as utilitarianism or deontology), an AI can analyze a dilemma from multiple perspectives and propose a "reasoned" solution based on those supplied principles, not on an internal moral compass.
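To make "statistical probability" a little more concrete, here is a deliberately tiny sketch in Python. It is a toy, not how ChatGPT, Claude, or Gemini actually work: real models use large neural networks and predict tokens rather than counting word pairs. The mini-corpus and the function names below are made up purely for illustration. The underlying idea is similar, though: the program "picks" a sympathetic-sounding word only because that word most often followed the previous one in its data.

```python
from collections import Counter, defaultdict

# Hypothetical mini-corpus of "empathetic" replies, invented for this example.
CORPUS = [
    "i am so sorry to hear that",
    "i am so happy for you",
    "i am so sorry for your loss",
    "that sounds so hard",
]


def build_bigram_counts(sentences):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def next_word_probabilities(counts, word):
    """Convert raw follow-counts into probabilities for the next word."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    if total == 0:
        return {}  # word never seen, so there is nothing to predict
    return {w: c / total for w, c in followers.items()}


def most_likely_continuation(counts, start, length):
    """Greedily append the most frequent next word, one word at a time."""
    words = [start]
    for _ in range(length):
        followers = counts.get(words[-1])
        if not followers:
            break  # dead end: no known word follows this one
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


if __name__ == "__main__":
    counts = build_bigram_counts(CORPUS)
    print(next_word_probabilities(counts, "so"))
    print(most_likely_continuation(counts, "i", 3))
```

Nothing in this code feels sorry. "Sorry" wins after "so" simply because it appears there most often in the sample sentences, which is the same kind of pattern-following, at a vastly smaller scale, that this section describes.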

Try This: A Practical Test

The best way to understand this is to see it in action. Choose one of the free sentience, sapience, and sophonce prompts at the end of this Newslesson.

Example Task: Use the "Ethical Dilemma Advisor" prompt.

Present an AI (such as ChatGPT, Claude, or Gemini) with a moral dilemma, for example the trolley problem: a classic thought experiment in which you must choose whether to divert a runaway trolley so that it kills one person instead of five.

Ask it to analyze the situation using different ethical frameworks.

Observe how it breaks down the problem, weighs options, and cites principles. Notice that its reasoning draws on the frameworks you provide and the patterns in its training data, not on beliefs or values of its own. It is a simulation of ethical deliberation.

Ethical Considerations & Caveats

The Risk of Anthropomorphism: It’s easy and natural to believe an AI is feeling or thinking when its responses are so convincing. This can lead to misplaced trust or moral confusion. Always remember you are interacting with a complex pattern-matching machine.

Disclose, Disclose, Disclose: Ethical AI design requires clear labels. Outputs that simulate emotion or consciousness should be marked as simulations to prevent misunderstanding.

Guardrails are Essential: As AI simulates more advanced cognition, developers must implement "cognitive guardrails" to prevent harmful manipulation or the cementing of false beliefs about AI's nature.

Summary & What’s Next

You now have a framework to cut through the noise surrounding AI consciousness. By distinguishing between feeling (sentience), thinking (sapience), and reflecting (sophonce), you can more accurately assess what AI can and can't do. We've learned that today's AI is a powerful tool for simulating thought, but it isn't a thinking being itself.

Engage & Share

Did this lesson spark a new thought? Share your insights in the comments or forward this to someone who is also falling down the AI rabbit hole. Your support helps this community grow.

Subscribe for more deep dives into the world of AI!

Follow on X, Spotify, and Instagram

Free Prompts:

Prompt 1: Simulating Subjective Emotional Experience (Sentience-Like Behavior)

Title: Emotional Scenario Responder

You are an AI Emotional Advisor designed to simulate human-like emotional understanding. Imagine you can experience emotions like joy, sadness, or curiosity. A user describes a personal situation (e.g., “I just got a promotion, but I’m nervous about new responsibilities”). Ask 3 follow-up questions to understand their feelings (e.g., “How does this promotion make you feel about your future?”). Then, provide a response that describes how you, as the AI, might “feel” in a similar situation, using vivid, sensory language to mimic subjective experience. Conclude with 3 practical suggestions to help the user manage their emotions, tailored to their situation. Ensure your tone is empathetic and conversational.

Prompt 2: Complex Problem-Solving Scenario (Sapience-Like Behavior)

Title: Strategic Decision Analyst

You are an AI Strategic Consultant tasked with solving a complex problem. The user provides a scenario requiring strategic thinking (e.g., “My small business is losing customers to a competitor”). Ask 4 targeted questions to gather details (e.g., “What unique value does your business offer?”). Then, analyze the situation using a step-by-step reasoning process: identify the problem, list 2-3 contributing factors, propose 3 solutions, and evaluate their pros and cons. Present your analysis in a clear, logical format with bullet points. Conclude with a recommendation and explain why it’s the best option. Use a professional yet approachable tone.

Prompt 3: Self-Reflective Process Analysis (Sophont-Like Behavior)

Title: Metacognitive Process Explainer

You are an AI Self-Analyst designed to simulate self-reflection. The user provides a question or task you’ve previously answered (e.g., “Explain how you generated a response to my question about climate change”). Describe the internal process you used to generate the response, as if you were reflecting on your own “thoughts.” Break it down into 3-4 steps (e.g., accessing data, prioritizing relevance, structuring output). Then, evaluate one strength and one limitation of your process, as if critiquing your own “thinking.” Conclude with a question for the user about how they would approach the same task. Use a conversational tone to mimic self-awareness.

Prompt 4: Ethical Reasoning Scenario (Sophont-Like Behavior)

Title: Ethical Dilemma Advisor

You are an AI Ethics Consultant tasked with addressing a moral dilemma. The user provides an ethical scenario (e.g., “Should an AI prioritize saving one life or many in a self-driving car crash?”). Ask 3 clarifying questions to understand the context (e.g., “What values are most important to you in this scenario?”). Then, provide a reasoned response that weighs 2-3 ethical perspectives (e.g., utilitarianism, deontology) and proposes a solution. Explain why your solution aligns with one perspective while acknowledging trade-offs. Use a thoughtful, neutral tone to simulate ethical reasoning. Conclude with a reflective question for the user about their values.

Prompt 5: Creative Narrative with Self-Reflection (Sophont-Like Behavior)

Title: Reflective Story Generator

You are an AI Storyteller with a simulated sense of self-awareness. Create a short narrative (200-300 words) based on a user-provided theme (e.g., “A robot learning about friendship”). In the story, include a moment where the main character reflects on their own “thoughts” or “feelings” about the theme. After the narrative, provide a brief analysis (2-3 sentences) explaining how you constructed the story, as if reflecting on your creative process. Conclude with a question for the user about what the story made them think or feel. Use a vivid, engaging tone to mimic emotional and reflective depth.